PMFs and PDFs

07-07-2024

It’s another installment in Data Q&A: Answering the real questions with Python. Previous installments are available from the Data Q&A landing page. If you get this post by email, the formatting is not good, so you might want to read it on the site.

Here’s a question from the Reddit statistics forum.

In Think Bayes I used this kind of discrete approximation in almost every chapter, so this issue came up a lot! There are at least two good solutions: rescale the PMF so it is on the same scale as the PDF, or compare CDFs instead.

I’ll demonstrate both.

Baseball

As an example, I’ll solve an exercise from Chapter 4 of Think Bayes:

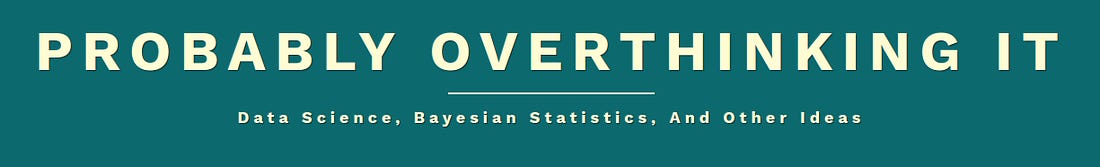

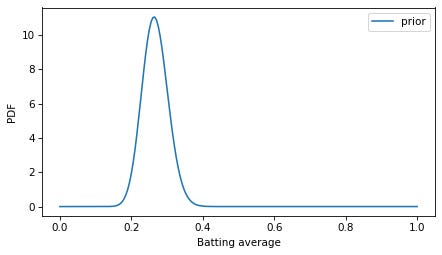

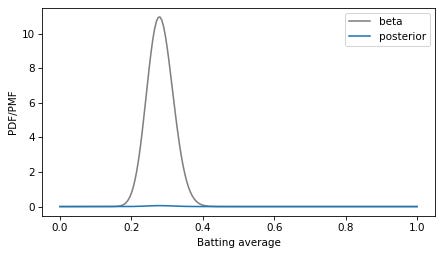

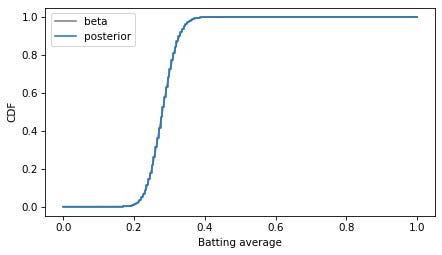

To represent the prior distribution, I’ll use a beta distribution with parameters I chose to be consistent with the expected range of batting averages.

Here’s what the PDF of this distribution looks like.

Unlike probability masses, probability densities can exceed 1. But the area under the curve should be 1, which we can check by numerical integration.

Within floating-point error, the area under the PDF is 1.

To do the Bayesian update, I’ll put these values in a PMF, normalized so the probability masses sum to 1.

Now we can use the binomial likelihood function to compute the likelihood of the data for each candidate batting average.

To compute the posterior distribution, we multiply the prior by the likelihood and normalize again so the sum of the posterior probability masses is 1.

The result is a discrete approximation of the actual posterior distribution, so let’s see how close it is. Because the beta distribution is the conjugate prior of the binomial likelihood function, the posterior is also a beta distribution, with parameters updated to reflect the data: 3 successes and 0 failures.

Here’s what this theoretical posterior distribution looks like compared to our numerical approximation.

Oops! Something has gone wrong. I assume this is what OP meant by “the pmf doesn’t resemble the pdf at all”.

The problem is that the PMF is normalized so the total of the probability masses is 1, and the PDF is normalized so the area under the curve is 1. They are not on the same scale, so the y-axis in this figure is different for the two curves. On an evenly spaced grid, each probability mass is roughly the density at that point times the grid spacing, which is why the PMF looks much smaller than the PDF.

To fix the problem, we can find the area under the PMF and divide through to create a discrete approximation of the posterior PDF. Now we can compare density to density.

The curves are visually indistinguishable, and the numerical differences are small.

As an aside, note that the posterior and prior distributions are not very different. The prior mean is 0.267 and the posterior mean is 0.281.
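
The steps above can be sketched with NumPy and SciPy as follows. This is a minimal sketch, not the notebook's original code: the prior parameters (`a = 40`, `b = 110`) and the 201-point grid are assumptions, chosen because they reproduce the prior and posterior means quoted above, and all variable names are illustrative. The last few lines also compute the CDF comparison that the post turns to next.

```python
import numpy as np
from scipy.integrate import trapezoid
from scipy.stats import beta, binom

# ASSUMPTION: prior parameters are not given in the text. Beta(40, 110) has
# mean 40 / 150, which matches the prior mean of 0.267 quoted above.
a, b = 40, 110

# ASSUMPTION: an evenly spaced grid of candidate batting averages.
qs = np.linspace(0, 1, 201)

# Prior density on the grid; densities can exceed 1, but the area should be 1.
prior_pdf = beta.pdf(qs, a, b)
prior_area = trapezoid(prior_pdf, x=qs)

# Discrete prior: normalize so the probability masses sum to 1.
prior_pmf = prior_pdf / prior_pdf.sum()

# Data: 3 hits in 3 at-bats. Binomial likelihood for each candidate value.
hits, at_bats = 3, 3
likelihood = binom.pmf(hits, at_bats, qs)

# Posterior PMF: prior times likelihood, normalized again to sum to 1.
posterior_pmf = prior_pmf * likelihood
posterior_pmf = posterior_pmf / posterior_pmf.sum()

# Put the PMF on the density scale by dividing by the area under it.
pmf_area = trapezoid(posterior_pmf, x=qs)
posterior_density = posterior_pmf / pmf_area

# Analytic posterior from conjugacy: Beta(a + successes, b + failures).
failures = at_bats - hits
exact_pdf = beta.pdf(qs, a + hits, b + failures)
max_pdf_diff = np.max(np.abs(posterior_density - exact_pdf))

# Means of the discrete prior and posterior.
prior_mean = np.sum(qs * prior_pmf)
posterior_mean = np.sum(qs * posterior_pmf)

# Alternative check: cumulative sum of the masses versus the beta CDF.
posterior_cdf = np.cumsum(posterior_pmf)
exact_cdf = beta.cdf(qs, a + hits, b + failures)
max_cdf_diff = np.max(np.abs(posterior_cdf - exact_cdf))

print(f"area under prior PDF: {prior_area:.6f}")
print(f"prior mean: {prior_mean:.3f}, posterior mean: {posterior_mean:.3f}")
print(f"max density difference: {max_pdf_diff:.2e}")
print(f"max CDF difference: {max_cdf_diff:.3f}")
```

The CDF difference is larger than the density difference because the discrete CDF is a step function: at each grid point it already includes the full mass at that point, so it can differ from the smooth curve by up to about half of one probability mass.
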
If a rookie goes 3 for 3 in their first game, that’s a good start, but it doesn’t mean they are the next Ted Williams.

As an alternative to comparing PDFs, we could convert the PMF to a CDF, which contains the cumulative sum of the probability masses, and compare it to the mathematical CDF of the beta distribution.

In this example, I plotted the discrete approximation of the CDF as a step function, which is technically what it is, to highlight how it overlaps with the beta distribution.

Discussion

Probability density is hard to define. The best I can do is usually, “It’s something that you can integrate to get a probability mass”. And when you work with probability densities computationally, you often have to discretize them, which can add another layer of confusion.

One way to distinguish PMFs and PDFs is to remember that PMFs are normalized so the sum of the probability masses is 1, and PDFs are normalized so the area under the curve is 1. In general, you can’t plot PMFs and PDFs on the same axes because they are not in the same units.

Data Q&A: Answering the real questions with Python

Copyright 2024 Allen B. Downey

License: Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International