Newsletters From:

Probably Overthinking It | Allen Downey | Substack

A blog about data science, Bayesian statistics, and more.

Density and Likelihood: What’s the Difference?

07-14-2024

It’s another installment in Data Q&A: Answering the real questions with Python. Previous installments are available from the Data Q&A landing page. If you get this post by email, the formatting might be broken; if so, you might want to read it on the site.

Density and Likelihood

Here’s a question from the Reddit statistics forum.

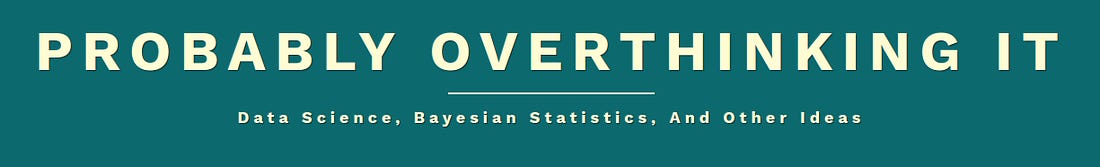

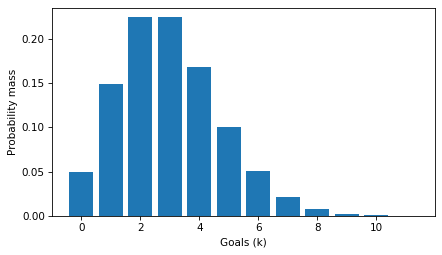

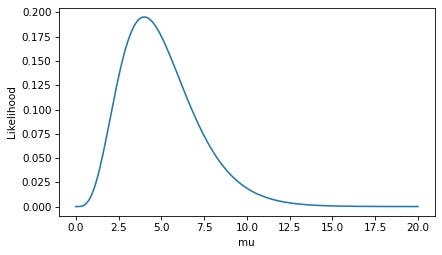

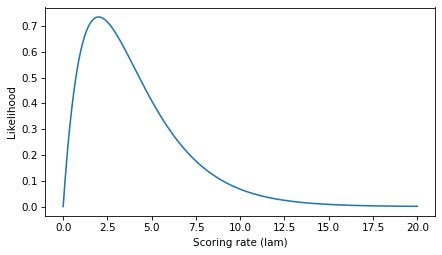

I agree with OP: these topics are confusing and not always explained well. So let’s see what we can do.

Mass

I’ll start with a discrete distribution, so we can leave density out of it for now and focus on the difference between a probability mass function (PMF) and a likelihood function.

As an example, suppose we know that a hockey team scores goals at a rate of 3 goals per game on average. If we model goal scoring as a Poisson process, which is not a bad model, the number of goals per game follows a Poisson distribution with that rate as its parameter. The PMF of the Poisson distribution tells us the probability of scoring any given number of goals in a game.

The PMF is a function of the number of goals, with the rate held fixed. The values in the distribution are probability masses, so if we want to know the probability of scoring exactly 5 goals, we can look it up directly. It’s about 10%.

The sum of the probability masses, evaluated over a finite range of goal counts, is close to 1. If we extend the range to infinity, the sum is exactly 1.

Likelihood

Now suppose we don’t know the goal scoring rate, but we observe 4 goals in one game. There are several ways we can use this data to estimate the rate; one of them is to find the maximum likelihood estimator (MLE). To find the MLE, we need to maximize the likelihood function, which is a function of the rate, with the observed number of goals held fixed.

In this case, the maximum likelihood estimator is equal to the number of goals we observed.

That’s the answer to the estimation problem, but now let’s look more closely at those likelihoods. The likelihood at the maximum of the likelihood function is a probability mass: specifically, it is the probability of scoring 4 goals, given that the goal-scoring rate is exactly 4.0.

So, some likelihoods are probability masses, but not all.

Density

Now suppose, again, that we know the goal scoring rate is exactly 3, but now we want to know how long it will be until the next goal.
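Before turning to the continuous case, here is a minimal sketch of the discrete computations described so far: the Poisson PMF at a fixed rate, and the likelihood of the rate given 4 observed goals. It assumes NumPy and SciPy. The variable names (`mu`, `ks`, `ps`, `mus`, `likelihoods`), the cutoff of 11 goals, and the grid of candidate rates are illustrative assumptions, not necessarily what the original notebook used.

```python
import numpy as np
from scipy.stats import poisson

# Known scoring rate from the text: 3 goals per game.
mu = 3

# PMF: a function of the outcome k, with the rate mu held fixed.
# The cutoff at 11 goals is an arbitrary choice for this sketch.
ks = np.arange(12)
ps = poisson.pmf(ks, mu)

# Probability mass for exactly 5 goals (roughly 10%).
p5 = ps[5]

# Over this finite range the masses sum to slightly less than 1.
total = ps.sum()

# Likelihood: a function of the rate, with the observed outcome held fixed.
k_obs = 4                          # goals observed in one game (from the text)
mus = np.linspace(0.1, 10, 100)    # assumed grid of candidate rates, step 0.1
likelihoods = poisson.pmf(k_obs, mus)

# Grid value with the highest likelihood: the maximum likelihood estimate.
mle = mus[np.argmax(likelihoods)]

# The likelihood at the maximum is itself a probability mass:
# the probability of scoring 4 goals when the rate is 4.
max_likelihood = likelihoods.max()
same_mass = poisson.pmf(k_obs, mle)

print(f"P(5 goals | rate 3)   = {p5:.4f}")
print(f"Sum of masses, k <= 11 = {total:.5f}")
print(f"MLE of the rate        = {mle:.1f}")
print(f"Likelihood at the MLE  = {max_likelihood:.4f}")
```

The same function, `poisson.pmf`, produces both arrays. What changes is which argument varies: the outcome for the PMF, the rate for the likelihood.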
If we model goal scoring as a Poisson process, the time until the next goal follows an exponential distribution whose rate parameter is the goal scoring rate. Because the exponential distribution is continuous, it has a probability density function (PDF) rather than a probability mass function (PMF).

We can approximate the distribution by evaluating the exponential PDF at a set of equally-spaced times. SciPy’s implementation of the exponential distribution does not take the rate as a parameter; it takes a scale instead, which is the reciprocal of the rate.

The PDF is a function of time, with the rate held fixed. When the PDF is plotted, the values on the y-axis extend above 1. That would not be possible if they were probability masses, but it is possible because they are probability densities.

By themselves, probability densities are hard to interpret. As an example, we can pick an arbitrary element from the set of times and evaluate the PDF there; the result is the probability density at that time. To get something meaningful, we have to compute an area under the PDF.

For example, if we want to know the probability that the first goal is scored during the first half of a game, we can compute the area under the curve from the start of the game to the halfway point. We can use a slice index to select the elements of the discretized PDF in that interval and compute the area. The probability of a goal in the first half of the game is about 78%.

To check that we got the right answer, we can compute the same probability using the exponential CDF. Considering that we used a discrete approximation of the PDF, our estimate is pretty close.

This example provides an operational definition of a probability density: it’s something you can add up over an interval (or integrate) to get a probability mass.

Likelihood and Density

Now let’s suppose that we don’t know the rate parameter, but we observe the time of the first goal. First we’ll define a range of possible values of the rate. Then for each value of the rate, we’ll evaluate the exponential PDF at the observed time. The result is a likelihood function, which is a function of the rate, with the observed time held fixed.

Again, we can find the value of the rate that maximizes the likelihood. If the first goal is scored at a given time, the maximum likelihood estimate of the rate is the reciprocal of that time.

Now let’s look more closely at those likelihoods.
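The continuous computations can be sketched the same way. This is a minimal sketch assuming NumPy and SciPy; the names, the time grid, and the grid of candidate rates are illustrative. The observed time `t_obs = 0.5` (in games) is an assumption, chosen because it makes the estimate come out to a rate of 2, the parameter value used in the next paragraph.

```python
import numpy as np
from scipy.stats import expon
from scipy.integrate import trapezoid

# Known scoring rate from the text: 3 goals per game.
lam = 3

# SciPy parameterizes the exponential distribution by scale = 1 / rate.
ts = np.linspace(0, 4, 401)        # assumed time grid in games, step 0.01
ps = expon.pdf(ts, scale=1 / lam)

# Densities can exceed 1; at t = 0 the density equals the rate.
density_at_zero = ps[0]

# Probability of a goal in the first half of a game:
# area under the PDF from t = 0 to t = 0.5, selected with a slice.
i_half = 50                        # index of t = 0.5 on this grid
area = trapezoid(ps[: i_half + 1], ts[: i_half + 1])

# The same probability from the CDF, for comparison.
exact = expon.cdf(0.5, scale=1 / lam)

# Likelihood: fix the observed time, vary the rate.
t_obs = 0.5                        # assumed observation, not from the text
lams = np.linspace(0.1, 10, 100)   # assumed grid of candidate rates, step 0.1
likelihoods = expon.pdf(t_obs, scale=1 / lams)

# Grid value with the highest likelihood; analytically this is 1 / t_obs.
mle = lams[np.argmax(likelihoods)]

print(f"Density at t = 0         = {density_at_zero:.1f}")
print(f"Area from 0 to 0.5       = {area:.4f}")
print(f"CDF at 0.5               = {exact:.4f}")
print(f"MLE of the rate          = {mle:.1f}")
print(f"Likelihood at the MLE    = {likelihoods.max():.4f}")
```

The trapezoid rule is one choice for the discrete approximation; a plain sum of density times grid spacing would also work, with a somewhat larger error.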
If we select the corresponding element of the likelihood function, the result is a probability density. Specifically, it’s the value of the exponential PDF with parameter 2, evaluated at the observed time.

So, some likelihoods are probability densities, but as we’ve already seen, not all.

Discussion

What have we learned?

A PMF or PDF is a function of an outcome (like the number of goals scored or the time until the first goal) given a fixed parameter. If you evaluate a PMF, you get a probability mass; if you evaluate a PDF, you get a probability density. If you integrate density over an interval, you get a probability mass.

A likelihood function is a function of a parameter, given a fixed outcome. If you evaluate a likelihood function, you might get a probability mass or a density, depending on whether the outcome is discrete or continuous. Either way, evaluating a likelihood function at a single point doesn’t mean much by itself. A common use of a likelihood function is finding a maximum likelihood estimator.

As OP says, “Probability density and likelihood are not the same thing”, but the distinction is not clear because they are not completely distinct things, either.
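One way to make this summary concrete is to evaluate a single two-argument function along each of its arguments. The sketch below is an added illustration under stated assumptions: the helper `density`, the grids, and the observed time of 0.5 games are hypothetical choices. With the rate fixed, the values over time integrate to about 1, as a PDF should. With the time fixed, the values over the rate are likelihoods, and nothing forces their area to be 1.

```python
import numpy as np
from scipy.stats import expon
from scipy.integrate import trapezoid


def density(t, lam):
    """Exponential density at time t for rate lam (hypothetical helper)."""
    return expon.pdf(t, scale=1 / lam)


# Read as a PDF: the rate is fixed at 3 and the outcome t varies.
ts = np.linspace(0, 10, 10001)
pdf_values = density(ts, 3)
area_over_t = trapezoid(pdf_values, ts)

# Read as a likelihood: the outcome is fixed at 0.5 and the rate varies.
t_obs = 0.5                          # assumed observation
lams = np.linspace(0.01, 40, 4000)   # assumed grid of candidate rates
likelihood_values = density(t_obs, lams)
area_over_lam = trapezoid(likelihood_values, lams)

print(f"Area over outcomes, rate fixed: {area_over_t:.3f}")
print(f"Area over rates, outcome fixed: {area_over_lam:.3f}")
```

For this model the area over the rate works out analytically to 1 / t_obs², which is 4 here, so the likelihood function is not a probability distribution over the rate even though each of its values is a probability density.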

I hope that helps!

Data Q&A: Answering the real questions with Python

Copyright 2024 Allen B. Downey

License: Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International

